At the recent Mashcat event I volunteered to do a session called ‘making the most of MARC’. What I wanted to do was demonstrate how some of the current ‘resource discovery’ software is based on technology that can really extract value from bibliographic data held in MARC format, and how this creates opportunities to build tools both for users and for library staff.

One of the triggers for the session was seeing, over a period of time, a number of complaints about the limitations of ‘resource discovery’ solutions – I wanted to show that many of the perceived limitations were not about the software, but about the implementation. I also wanted to show that while some technical knowledge is needed, some of these solutions can be run on standard PCs, which puts the tools, and the ability to experiment and play with MARC records, within the grasp of any tech-savvy librarian or user.

Many of the current ‘resource discovery’ solutions are based on a search technology called Solr, part of a project at the Apache Software Foundation. Solr provides a powerful set of indexing and search facilities, but what makes it especially interesting for libraries is that significant work has already been done, by the SolrMARC project, to use Solr to index MARC data. SolrMARC delivers a set of pre-configured indexes and the ability to extract data from MARC records (gracefully handling ‘bad’ MARC data, such as badly encoded characters, as well). While Solr is powerful, it is SolrMARC that makes it easy to implement and exploit in a library context.

SolrMARC is used by two open source resource discovery products – VuFind and Blacklight. Although VuFind and Blacklight have differences, and are written in different languages (VuFind is PHP while Blacklight is Ruby), they both use Solr, and specifically SolrMARC, to index MARC records, so the indexing and search capabilities underneath are essentially the same. What makes the difference between implementations is not the underlying technology but the configuration. The configuration allows you to define what data, from which part of the MARC records, goes into which index in Solr.

The key SolrMARC configuration file is index.properties. Simple configuration can be carried out in one line, for example (see the SolrMARC wiki page on index.properties for more examples and details):

title_t = 245a

This creates a searchable ‘title’ index from the contents of subfield $$a of the 245 field. If you want to draw information from multiple parts of the MARC record, this can be done easily – for example, to index 245 $$a and $$b together with 246 $$a:

title_t = 245ab:246a
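To make the field syntax concrete, here is a toy Java sketch of how a spec such as `245ab:246a` could be read: split on `:`, take the first three characters as the tag and the rest as subfield codes, and keep only those subfields. The `DataField` class is a hypothetical stand-in rather than SolrMARC's (or marc4j's) own record classes, and joining subfields with a single space is an assumption; SolrMARC's real behaviour may differ in detail.

```java
import java.util.ArrayList;
import java.util.List;

public class FieldSpecSketch {
    // Hypothetical, minimal stand-in for a MARC data field: a tag plus
    // subfields kept in record order as {code, value} pairs.
    static class DataField {
        final String tag;
        final List<String[]> subfields = new ArrayList<>();

        DataField(String tag) { this.tag = tag; }

        DataField add(char code, String value) {
            subfields.add(new String[] {String.valueOf(code), value});
            return this;
        }
    }

    // Interprets a spec such as "245ab:246a". Each ':'-separated part is a
    // three-character tag followed by the subfield codes to keep; the kept
    // subfields of each matching field are joined with single spaces.
    static List<String> extract(List<DataField> record, String spec) {
        List<String> values = new ArrayList<>();
        for (String part : spec.split(":")) {
            String tag = part.substring(0, 3);
            String codes = part.substring(3);
            for (DataField field : record) {
                if (!field.tag.equals(tag)) continue;
                StringBuilder sb = new StringBuilder();
                for (String[] sf : field.subfields) {
                    if (codes.contains(sf[0])) {
                        if (sb.length() > 0) sb.append(' ');
                        sb.append(sf[1]);
                    }
                }
                if (sb.length() > 0) values.add(sb.toString());
            }
        }
        return values;
    }

    public static void main(String[] args) {
        List<DataField> record = new ArrayList<>();
        record.add(new DataField("245")
                .add('a', "Making the most of MARC :")
                .add('b', "a short guide /")
                .add('c', "by A. Librarian."));
        record.add(new DataField("246").add('a', "MARC made easy"));
        // prints [Making the most of MARC : a short guide /, MARC made easy]
        System.out.println(extract(record, "245ab:246a"));
    }
}
```

The ` :` and ` /` left in the output are ISBD punctuation carried over from the record, which is the kind of thing the built-in removeTrailingPunct function is meant to clean up.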

Similarly, you can extract specific character positions from the MARC ‘fixed fields’:

language_facet = 008[35-37]:041a:041d

This creates a ‘faceted’ index (for browsing and filtering) for the language of the material, based on character positions 35-37 of the 008 field as well as 041 $$a and $$d.
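The bracketed positions are zero-based and inclusive, so `008[35-37]` picks out the three-character language code. A minimal Java sketch of that lookup, assuming the control fields are held in a plain map keyed by tag (with the leader stored under `000`, as in this post); `FixedFieldSketch` and its `extract` method are hypothetical names, not SolrMARC's API:

```java
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class FixedFieldSketch {
    // Matches specs such as "008[35-37]" or "000[6]": a tag, a start position
    // and an optional end position (positions are zero-based and inclusive).
    private static final Pattern SPEC = Pattern.compile("(\\d{3})\\[(\\d+)(?:-(\\d+))?\\]");

    // controlFields maps a tag to its whole value, with the leader under "000".
    // Returns null if the spec is malformed, the field is missing, or the field
    // is too short to contain the requested positions.
    static String extract(Map<String, String> controlFields, String spec) {
        Matcher m = SPEC.matcher(spec);
        if (!m.matches()) return null;
        String value = controlFields.get(m.group(1));
        int start = Integer.parseInt(m.group(2));
        int end = (m.group(3) == null) ? start : Integer.parseInt(m.group(3));
        if (value == null || end < start || value.length() <= end) return null;
        return value.substring(start, end + 1);
    }

    public static void main(String[] args) {
        Map<String, String> controlFields = new HashMap<>();
        controlFields.put("000", "00000nam a2200000 a 4500");
        // A 40-character 008 for a book with language code "eng" in positions 35-37.
        controlFields.put("008", String.format("%-35s", "850101s1985    enk") + "eng d");
        System.out.println(extract(controlFields, "008[35-37]")); // eng
        System.out.println(extract(controlFields, "000[6-7]"));   // am
    }
}
```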

As well as making it easy to take data from specific parts of the MARC record, SolrMARC also comes pre-packaged with some common tasks you might want to carry out on a field before adding it to the index. The three most common are:

Removing trailing punctuation – e.g.

publisher_facet = custom, removeTrailingPunct(260b)

This does exactly what it says: it removes any punctuation at the end of the field before adding it to the index.
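This is a simplified Java sketch of the idea, not SolrMARC's own implementation: it strips any run of trailing ISBD-style punctuation and spaces. The set of characters treated as trailing punctuation is an assumption.

```java
public class TrailingPunctSketch {
    // Characters treated as trailing punctuation in this sketch (an assumption,
    // not SolrMARC's exact list): space, slash, colon, semicolon, comma, equals, full stop.
    private static final String TRAILING = " /:;,=.";

    // Removes any run of the characters above from the end of the value.
    static String removeTrailingPunct(String value) {
        if (value == null) return null;
        int end = value.length();
        while (end > 0 && TRAILING.indexOf(value.charAt(end - 1)) >= 0) {
            end--;
        }
        return value.substring(0, end);
    }

    public static void main(String[] args) {
        System.out.println(removeTrailingPunct("Penguin Books,"));            // Penguin Books
        System.out.println(removeTrailingPunct("Oxford University Press ;")); // Oxford University Press
    }
}
```

Stripping a final full stop is a trade-off: it tidies a value like `Penguin Books.` but also turns `Ltd.` into `Ltd`, which is usually acceptable for a facet but worth knowing about.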

Using data from ‘linked’ fields – e.g.

title_t = custom, getLinkedFieldCombined(245a)

This takes advantage of MARC’s ability to link a field to an alternate graphic representation of the same text (an 880 field, linked via subfield $$6) – e.g. a title recorded in its original, non-Latin script alongside its romanised form.
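In MARC the linkage works through subfield $$6: a field such as 245 carries `880-01`, and the matching 880 field carries `245-01` (optionally followed by a script code such as `/(N` for Cyrillic). A simplified Java sketch of collecting both versions for one index, using a hypothetical `Field` class; it matches 880 fields on the tag part of the link only and ignores occurrence numbers, which SolrMARC's getLinkedFieldCombined may well handle differently:

```java
import java.util.ArrayList;
import java.util.List;

public class LinkedFieldSketch {
    // Hypothetical minimal data field: a tag plus {code, value} subfield pairs in order.
    static class Field {
        final String tag;
        final List<String[]> subfields = new ArrayList<>();

        Field(String tag) { this.tag = tag; }

        Field add(char code, String value) {
            subfields.add(new String[] {String.valueOf(code), value});
            return this;
        }

        // First value of the given subfield code, or null if absent.
        String first(char code) {
            for (String[] sf : subfields) {
                if (sf[0].charAt(0) == code) return sf[1];
            }
            return null;
        }
    }

    // Collects subfield `code` from every field with the given tag, plus from any
    // 880 field whose $6 link (e.g. "245-01/(N") points back at that tag.
    static List<String> combined(List<Field> record, String tag, char code) {
        List<String> values = new ArrayList<>();
        for (Field f : record) {
            String link = f.first('6');
            boolean direct = f.tag.equals(tag);
            boolean linked = f.tag.equals("880") && link != null && link.startsWith(tag + "-");
            if (direct || linked) {
                String value = f.first(code);
                if (value != null) values.add(value);
            }
        }
        return values;
    }

    public static void main(String[] args) {
        List<Field> record = new ArrayList<>();
        record.add(new Field("245").add('6', "880-01").add('a', "Voĭna i mir"));
        record.add(new Field("880").add('6', "245-01/(N").add('a', "Война и мир"));
        // prints both the romanised and the original-script title
        System.out.println(combined(record, "245", 'a'));
    }
}
```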

Mapping codes/abbreviations to meaningful terms – e.g.

format = 000[6-7], (map.format)

Because the ‘format’ in the MARC leader (represented here by ‘000’) is stored as a code, it makes sense to translate it into more meaningful terms when creating a search index. The mapping of terms can be done either in the index.properties file or in separate mapping files. The mapping for the example above looks like:

```properties
map.format.aa = Book
map.format.ab = Serial
map.format.am = Book
map.format.as = Serial
map.format.ta = Book
map.format.tm = Book
map.format = Unknown
```
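The lookup itself is simple: prefix the code with the map name, and fall back to a default when there is no entry. A minimal Java sketch using `java.util.Properties`, which treats the bare `map.format` entry as the default since that is how the listing above appears to use it; the class and method names are hypothetical, and SolrMARC's own map loading (including separate mapping files) may differ:

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Properties;

public class TranslationMapSketch {
    // Looks up "<mapName>.<code>"; if absent, falls back to the bare "<mapName>" entry.
    static String translate(Properties props, String mapName, String code) {
        String value = props.getProperty(mapName + "." + code);
        return (value != null) ? value : props.getProperty(mapName);
    }

    // Parses "key = value" lines in standard Java properties syntax.
    static Properties load(String text) throws IOException {
        Properties props = new Properties();
        props.load(new StringReader(text));
        return props;
    }

    public static void main(String[] args) throws IOException {
        Properties props = load(
                "map.format.aa = Book\n"
                + "map.format.as = Serial\n"
                + "map.format.am = Book\n"
                + "map.format = Unknown\n");
        System.out.println(translate(props, "map.format", "am")); // Book
        System.out.println(translate(props, "map.format", "gm")); // Unknown
    }
}
```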

These (and a few other) built-in functions make it easy to index the MARC record, but you may still find that they don’t cover exactly what you want to achieve. For example, they don’t allow for ‘conditional’ indexing (such as ‘only index the text in field XXX when the record is for a Serial’), nor for extracting only specific text from within a MARC subfield.

Happily, you can extend the indexing by writing your own scripts that add new functions. There are a couple of ways of doing this, but the easiest is to write BeanShell scripts (essentially interpreted Java) which you can then call from the index.properties file. Obviously we are going beyond simple configuration and into programming at this point, but with a little knowledge you can start to work the data in the MARC record even harder.
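As an illustration of the kind of ‘conditional’ logic such a script might contain, here is a plain Java sketch that only returns values for serial records, using leader position 07 (bibliographic level, where `s` means serial). The choice of 310 $$a (current publication frequency) is purely illustrative, and the leader and field values are passed in as strings rather than read from SolrMARC's record object, so the function names and signatures are hypothetical:

```java
import java.util.Collections;
import java.util.List;

public class ConditionalIndexSketch {
    // Leader position 07 is the bibliographic level; 's' means serial.
    static boolean isSerial(String leader) {
        return leader != null && leader.length() > 7 && leader.charAt(7) == 's';
    }

    // Hypothetical custom function: return the 310 $a values only for serial
    // records, and an empty list (nothing to index) for anything else.
    static List<String> frequencyIfSerial(String leader, List<String> f310a) {
        return isSerial(leader) ? f310a : Collections.<String>emptyList();
    }

    public static void main(String[] args) {
        List<String> freq = Collections.singletonList("Monthly");
        System.out.println(frequencyIfSerial("00000nas a2200000 a 4500", freq)); // [Monthly]
        System.out.println(frequencyIfSerial("00000nam a2200000 a 4500", freq)); // []
    }
}
```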

Once you’ve written a script, you can use it from index.properties as follows:

format = script(format-full.bsh), getFormat

This uses the getFormat function from the format-full.bsh script. In this case I was experimenting with extracting not just basic ‘format’ information, but also more granular information on the type of content as described in the 008 field. The meaning of the 008 field varies with the type of material being catalogued, so you get code like:

```java
// f_000: type-of-record code taken from the leader ('000'), set earlier in the script
f_000 = f_000.toUpperCase();

// leader/06 'c' = notated music
if (f_000.startsWith("C"))
{
    result.add("MusicalScore");

    // 008/18-19 = form of composition; guard against records with no 008
    String formatCode = indexer.getFirstFieldVal(record, null, "008[18-19]");
    formatCode = (formatCode == null) ? "" : formatCode.toUpperCase();

    if (formatCode.equals("BT")) result.add("Ballet");
    if (formatCode.equals("CC")) result.add("ChristianChants");
    if (formatCode.equals("CN")) result.add("CanonsOrRounds");
    if (formatCode.equals("DF")) result.add("Dances");
    if (formatCode.equals("FM")) result.add("FolkMusic");
    if (formatCode.equals("HY")) result.add("Hymns");
    if (formatCode.equals("MD")) result.add("Madrigals");
    if (formatCode.equals("MO")) result.add("Motets");
    if (formatCode.equals("MS")) result.add("Masses");
    if (formatCode.equals("OP")) result.add("Opera");
    if (formatCode.equals("PT")) result.add("PartSongs");
    if (formatCode.equals("SG")) result.add("Songs");
    if (formatCode.equals("SN")) result.add("Sonatas");
    if (formatCode.equals("ST")) result.add("StudiesAndExercises");
    if (formatCode.equals("SU")) result.add("Suites");
}
else if (f_000.startsWith("D"))
{
    // ... the full script continues with further record types
}
```

(I’ve written an example script for parsing out detailed format/genre details, which you can get from https://github.com/ostephens/solrmarc-indexproperties/blob/master/index_scripts/format-full.bsh – but although more granular, it still doesn’t exploit all the possible granularity in the MARC fixed fields.)
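The long chain of `if` statements above can also be expressed as a lookup table, which keeps the 008/18-19 codes in one place and makes adding further codes a one-line change. A minimal Java sketch, assuming the leader type code and the 008 value are passed in as strings (the class and method names are hypothetical, and it covers only the music branch shown above):

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class MusicFormatSketch {
    // 008/18-19 "form of composition" codes handled by the if-chain above.
    private static final Map<String, String> FORM_OF_COMPOSITION = new HashMap<>();
    static {
        FORM_OF_COMPOSITION.put("BT", "Ballet");
        FORM_OF_COMPOSITION.put("CC", "ChristianChants");
        FORM_OF_COMPOSITION.put("CN", "CanonsOrRounds");
        FORM_OF_COMPOSITION.put("DF", "Dances");
        FORM_OF_COMPOSITION.put("FM", "FolkMusic");
        FORM_OF_COMPOSITION.put("HY", "Hymns");
        FORM_OF_COMPOSITION.put("MD", "Madrigals");
        FORM_OF_COMPOSITION.put("MO", "Motets");
        FORM_OF_COMPOSITION.put("MS", "Masses");
        FORM_OF_COMPOSITION.put("OP", "Opera");
        FORM_OF_COMPOSITION.put("PT", "PartSongs");
        FORM_OF_COMPOSITION.put("SG", "Songs");
        FORM_OF_COMPOSITION.put("SN", "Sonatas");
        FORM_OF_COMPOSITION.put("ST", "StudiesAndExercises");
        FORM_OF_COMPOSITION.put("SU", "Suites");
    }

    // typeCode plays the role of f_000 above; f008 is the whole 008 value (may be null).
    static List<String> formats(String typeCode, String f008) {
        List<String> result = new ArrayList<>();
        if (typeCode != null && typeCode.toUpperCase().startsWith("C")) {
            result.add("MusicalScore");
            if (f008 != null && f008.length() >= 20) {
                String genre = FORM_OF_COMPOSITION.get(f008.substring(18, 20).toUpperCase());
                if (genre != null) result.add(genre);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // 008 with "op" (opera) in positions 18-19, padded to 40 characters.
        String f008 = String.format("%-40s", "850101s1985    enk" + "op");
        System.out.println(formats("cm", f008)); // [MusicalScore, Opera]
    }
}
```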

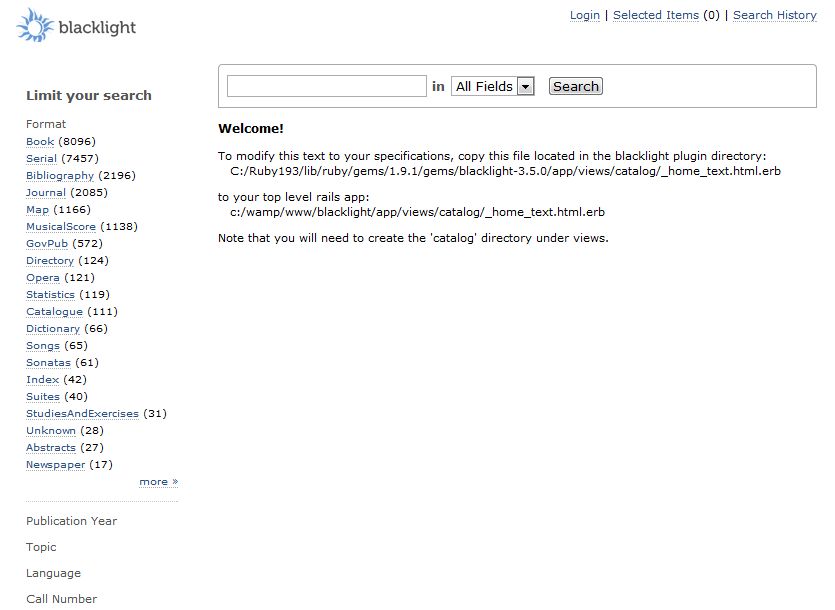

Once you’ve configured the indexing, you run it over a file of MARC records. The screenshot here shows a Blacklight interface with a faceted ‘format’ index which I created using a custom indexing script.

These tools excite me for a couple of reasons:

- A shared platform for MARC indexing, with a standard way of programming extensions, gives the opportunity to share techniques and scripts across platforms – if I write a clever set of BeanShell scripts to calculate page counts from the 300 field (along the lines demonstrated by Tom Meehan in another Mashcat session), you can use the same scripts with little or no effort in your SolrMARC installation

- The ability to run powerful, but easy to configure, search tools on standard computers. I can get Blacklight or VuFind running on a laptop (Windows, Mac or Linux) with very little effort, and I can have a few hundred thousand MARC records indexed using my own custom routines and searchable via an interface I have complete control over
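To give a flavour of the page-count idea from the first point, here is a deliberately simple Java sketch (an illustration only, not the approach shown in Tom Meehan's session) that takes the largest Arabic number followed by `p.` or `pages` in a 300 $$a value. The regular expression is an assumption and will miss plenty of real-world extent statements:

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class PageCountSketch {
    // An Arabic number followed by "p." or "page"/"pages", e.g. "345 p." or "212 pages".
    private static final Pattern PAGES = Pattern.compile("(\\d+)\\s*(?:p\\.|pages?\\b)");

    // Returns the largest matching number in a 300 $a extent statement, or -1 if
    // none is found. Roman-numeral preliminary pages (e.g. "xii,") are ignored.
    static int pageCount(String extent) {
        if (extent == null) return -1;
        Matcher m = PAGES.matcher(extent);
        int max = -1;
        while (m.find()) {
            max = Math.max(max, Integer.parseInt(m.group(1)));
        }
        return max;
    }

    public static void main(String[] args) {
        System.out.println(pageCount("xii, 345 p. :"));                  // 345
        System.out.println(pageCount("1 online resource (280 pages)"));  // 280
        System.out.println(pageCount("3 v. ;"));                         // -1
    }
}
```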

While the second of these points may seem like a pretty niche market – and of course it is – we are increasingly seeing librarians and scholars make use of this kind of solution, especially in the digital humanities space. These solutions are relatively cheap and easy to run. Indexing a few hundred thousand MARC records takes a little time, but we are talking tens of minutes, not hours, so you can try something, delete the index and try something else. You can focus on drawing out very specific values from the MARC record and even design specialist indexes and interfaces for specific kinds of material – this is within the grasp not just of library services, but of the individual researcher.

In the pub after the main Mashcat event had finished, we were chatting about the possibilities offered by Blacklight/VuFind and SolrMARC. I used a phrase I know I borrowed from someone else, though I don’t know who – ‘boutique search’ – meaning highly customised search interfaces that serve a specific audience or collection.

A final note: we have the software, what we need is data. Unless more libraries follow the lead of Harvard, Cambridge and others and make their MARC records available for use, any software which consumes MARC records is of limited use…
