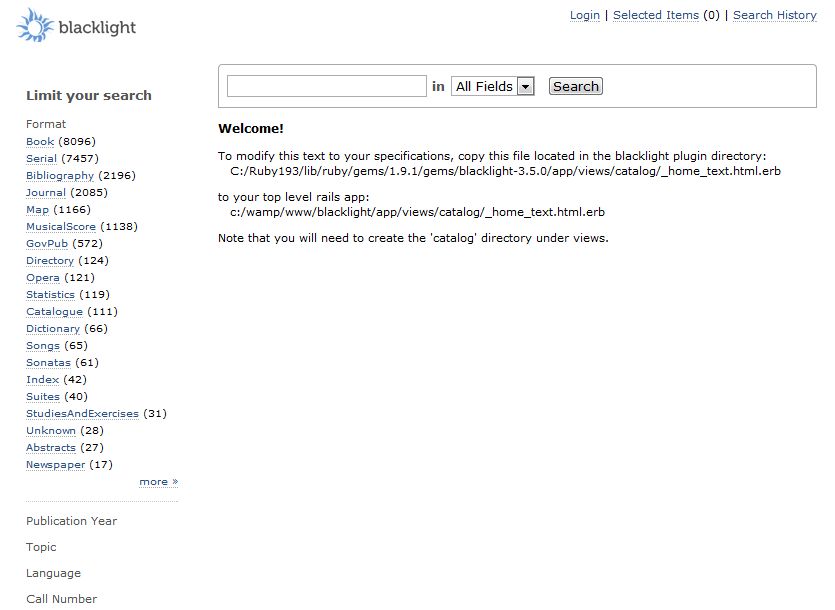

In my previous post on MaRC and SolrMaRC I described how SolrMaRC could be used, as part of Blacklight, VuFind or other discovery layers, to create indexes from various parts of the MaRC record. My question to those at Mashcat was “what would you do, or like to do, with these capabilities?” I was especially interested in how you might be able to offer better search experiences for specific types of materials – what we talked about in the pub afterwards as ‘boutique catalogues’.

During the Mashcat session I asked the audience what types of materials/collections it might be interesting to look at with this in mind. In the session itself we came up with three suggestions – so I thought I’d capture these here, and maybe try to start working out what the SolrMARC index configuration and (if necessary) related scripts might look like. I’d hoped to take advantage of having a lot of cataloguing knowledge in the room to drill into some of these ideas in detail, but in the end – entirely down to me – this didn’t happen. Looking back on it I should have suggested breaking into groups to do this so that people could have discussed the detail and brought it back together – next time …

Please use the comments to suggest other ‘boutique catalogue’ ideas, additional indexes that might be useful, and what the search/display experience might be like:

(Born) Digital formats

Main suggestion – provide a ‘file format’ facet

If 007/00 = c, then it’s an electronic resource and there’s information to dig out of 007/01-13
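As a rough illustration of what "digging out" of the 007 might involve, here is a minimal Python sketch that decodes a few positions of an electronic resource 007. It is a standalone toy rather than a SolrMaRC script: the function name `describe_007` and the lookup tables are my own, the code tables are deliberately partial, and the position meanings are as I read them in the MARC 21 documentation, so they should be checked against it. Note that 007/09 only appears to say whether there is one file format or several, so a facet naming actual formats would more likely have to come from somewhere like the 347.

```python
# Partial code tables (assumption: taken from my reading of MARC 21, not exhaustive).
CARRIER = {"j": "magnetic disk", "o": "optical disc", "r": "remote"}  # 007/01
FILE_FORMATS = {"a": "one file format", "m": "multiple file formats", "u": "unknown"}  # 007/09
COMPRESSION = {"a": "uncompressed", "b": "lossless", "d": "lossy",
               "m": "mixed", "u": "unknown"}  # 007/12


def describe_007(value):
    """Decode a few positions of an electronic resource 007 string.

    Returns None when 007/00 is not 'c'. Many real records only fill in the
    first two positions, so positions that are missing come back as None.
    """
    if not value or value[0] != "c":
        return None

    def at(pos):
        # Character at a given position, or None if the field is too short.
        return value[pos] if len(value) > pos else None

    return {
        "carrier": CARRIER.get(at(1), "other/unlisted"),
        "file_formats": FILE_FORMATS.get(at(9)),
        "compression": COMPRESSION.get(at(12)),
    }


# Invented example value: a remote resource, multiple formats, lossless compression.
print(describe_007("cr cn ---maubu"))
```

The returned values could then be fed into whatever facet fields the discovery layer defines.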

Might be worth looking at 347$$a$$b (as well as other subfields)

Would it be possible to look up file formats on Pronom/Droid and add extra information either when indexing or in the display?

Rare books

Main suggestion – use ‘relators’ from the added entry fields – specifically 700$$e. For rare books these can often contain information about the previous owners of the item, which can be of real interest to researchers.
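To make the relator idea a bit more concrete, here is a minimal Python sketch that groups the names in 700$$a by the relator term in 700$$e. It is a toy, not SolrMaRC code: the record is represented as a plain list of (tag, subfields) pairs, the function name `names_by_relator` is hypothetical, and the names in the example are invented. It also assumes relator terms are entered as text in $$e, which will depend on local practice.

```python
from collections import defaultdict


def names_by_relator(fields):
    """Group 700$a names by their 700$e relator terms.

    `fields` is an iterable of (tag, [(subfield_code, value), ...]) pairs,
    a simplified stand-in for a parsed MARC record.
    """
    index = defaultdict(list)
    for tag, subfields in fields:
        if tag != "700":
            continue
        name = next((v for c, v in subfields if c == "a"), None)
        if name is None:
            continue
        for code, value in subfields:
            if code == "e":
                # Strip trailing ISBD punctuation and normalise case.
                term = value.strip().rstrip(".,").lower()
                index[term].append(name.strip().rstrip(","))
    return dict(index)


# Invented example record.
record = [
    ("245", [("a", "A booke of secrets")]),
    ("700", [("a", "Example, Alice,"), ("e", "former owner.")]),
    ("700", [("a", "Sample, Bob,"), ("e", "binder.")]),
    ("700", [("a", "Other, Carol,"), ("e", "former owner.")]),
]
print(names_by_relator(record))
```

Grouping by relator like this would let a rare books interface offer something like a separate "former owner" facet, rather than lumping every added entry in with the authors.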

I’d asked a question about indexing rare books previously on LibCatCode – see http://www.libcatcode.org/questions/42/indexing-rare-book-collections. I suspect it might be worth re-asking on the new Stack Exchange site for Libraries and Information Science.

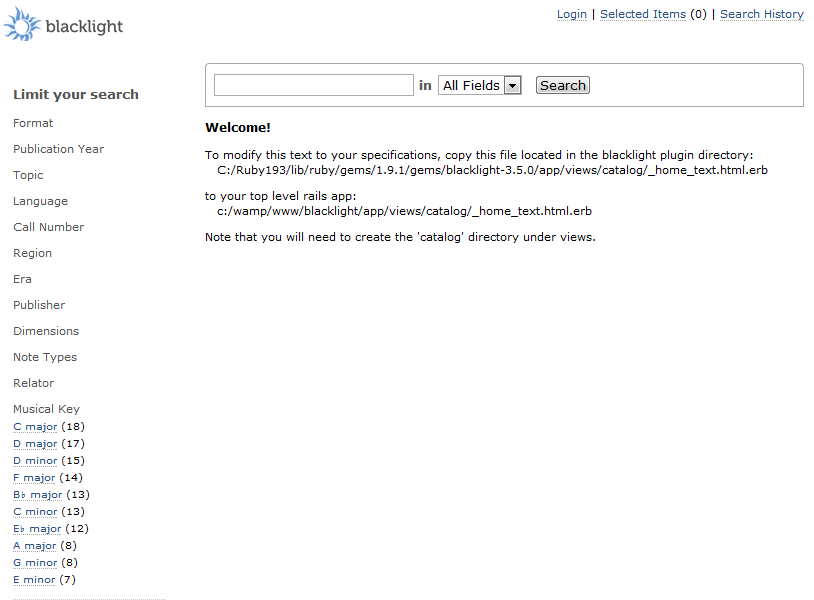

Music

Main suggestions – create faceted indexes on the following:

- Key

- Beats per minute

- Time signature

I’m keen on the music idea – MARC isn’t great for cataloguing music in the first place, and much useful information isn’t exposed in generic library catalogue interfaces. I had a quick look at where ‘key’ might be stored, and it turns out to be in quite a few places. I started putting together an expression that could be dropped into a SolrMaRC index.properties file:

musicalkey_facet = custom, getLinkedFieldCombined(600r:240r:700r:710r:800r:810r:830r)

I’m not sure if you actually need to use the ‘getLinkedFieldCombined’ (probably not). I’m also not sure I’ve got all the places that the key can be recorded explicitly – $$r for musical key appears in lots of places.

What I definitely do know is that although 600$$r etc. can be used to record the musical key, the key might instead appear as a text string in the 245, or possibly in a notes field (500, 505, etc.). Whether it is recorded explicitly in the 600$$r (or another $$r) will depend on local cataloguing practice. I’m guessing that it might be worth writing a custom indexing script that uses regular expressions to search for expressions of musical key in 245 and 5XX fields – although it would need to cover multiple languages (I’d say English, German and French at least).
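A minimal sketch of what the regular expression approach might look like, written as standalone Python rather than as a SolrMaRC custom script. Everything here is an assumption for illustration: the patterns, the function name `find_keys` and the normalised English labels are mine, and the patterns only cover the commonest ways of writing a key. They will miss some forms and produce false positives (the English pattern would happily match “A minor poet”), so treat this as a starting point only.

```python
import re
import unicodedata

# English: "C minor", "B-flat major", "F# minor". The tonic must be upper case.
ENGLISH = re.compile(
    r"\b([A-G])(?:[- ]?([Ss]harp|[Ff]lat)|([#♯b♭]))?[- ]([Mm]ajor|[Mm]inor)\b"
)
# German: "Es-Dur", "fis-Moll", "h-Moll". B means B-flat and H means B natural.
GERMAN = re.compile(r"\b([A-Ha-h])(is|es|s)?-([Dd]ur|[Mm]oll)\b")
# French: "ut majeur", "ré mineur", "si bémol majeur".
FRENCH = re.compile(
    r"\b(ut|do|ré|re|mi|fa|sol|la|si)"
    r"(?:[ -](dièse|diese|bémol|bemol))?[ -](majeur|mineur)\b",
    re.IGNORECASE,
)
FRENCH_TONICS = {"ut": "C", "do": "C", "ré": "D", "re": "D", "mi": "E",
                 "fa": "F", "sol": "G", "la": "A", "si": "B"}


def _label(tonic, accidental, mode):
    """Build a normalised English label such as 'B-flat major'."""
    name = tonic + ("-" + accidental if accidental else "")
    return f"{name} {mode}"


def find_keys(text):
    """Return normalised keys found in text: English, then German, then French."""
    # Compose accented characters so that 'é' matches however it was encoded.
    text = unicodedata.normalize("NFC", text)
    keys = []
    for m in ENGLISH.finditer(text):
        tonic, word, symbol, mode = m.groups()
        if word:
            accidental = word.lower()
        elif symbol:
            accidental = "sharp" if symbol in "#♯" else "flat"
        else:
            accidental = None
        keys.append(_label(tonic, accidental, mode.lower()))
    for m in GERMAN.finditer(text):
        tonic, suffix, mode = m.groups()
        tonic = tonic.upper()
        accidental = {"is": "sharp", "es": "flat", "s": "flat"}.get(suffix)
        if tonic == "H":
            tonic = "B"
        elif tonic == "B":
            accidental = "flat"
        keys.append(_label(tonic, accidental,
                           "major" if mode.lower() == "dur" else "minor"))
    for m in FRENCH.finditer(text):
        tonic, word, mode = m.groups()
        accidental = None
        if word:
            accidental = "sharp" if word.lower().startswith("di") else "flat"
        keys.append(_label(FRENCH_TONICS[tonic.lower()], accidental,
                           "major" if mode.lower() == "majeur" else "minor"))
    return keys


print(find_keys("Messe in h-Moll"))
print(find_keys("Sonata in B-flat major, op. 22"))
```

Normalising everything to one label per key is a design choice that keeps the facet values consistent across languages; a real index might want to keep the original wording as well for display.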

I haven’t looked at Beats per minute or Time signature and where you might get that from. It seems obvious that also getting information on what instruments are involved etc. would be of interest.

In fact there has already been some work on a customised Blacklight interface for a Music library – mentioned at https://www.library.ns.ca/node/2851 – although I can’t find any further details right now (and I don’t have access to the Library Hi-Tech journal). If the details of this are published online anywhere I’d be very interested. Building an index of instruments is also one of the examples on the SolrMaRC wiki page on the index.properties file.

Perhaps a final word of caution on all this – you can only build indexes on this rich data if it exists in the MaRC record to start with. The MaRC record can hold much more information than is typically entered, and some of the fields I mention in the examples above may not be commonly used. So either the information isn’t recorded at all, or you would have to write scripts to extract it from notes fields etc. The latter, though painful, might be possible; but in the former case, there is nothing you can do…