This is a slightly delayed final post on my Will Hack entry – which I’m really happy to say won the “Best Open Hack” prize in the competition.

I should start by acknowledging the other excellent work done in the competition, with special mention of the overall winner, a ‘second screen’ app to use when watching a Shakespeare play by Kate Ho and Tom Salyers. I should also thank the team behind the whole Will Hack event at Edina – Muriel, Nicola, Neil and Richard – the idea of an online hackathon was great, and I hope they’ll be writing up the experience of running it.

I presented my hack as part of the final Google+ Hangout and you can watch the video on YouTube. Here I’ll describe the hack, but also reflect on the nature and potential of the Will’s World Registry which I used in the hack.

The Will’s World Registry and #willhack are part of the Jisc Discovery Programme – which is a programme I’ve been quite heavily involved in. The idea of an ‘aggregation’ which brings together data from multiple sources (which is what the Will’s World Registry does) was part of the original Vision document which informed the setting up of the Discovery programme. When I sat down to start my #willhack, I really wanted to see if the registry fulfilled any of the promise that the Vision outlined.

The Hack

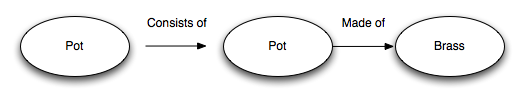

When I started looking at the registry, it was difficult to know what I should search for. What data was in there? What searches would give interesting results? So I decided that rather than trying to construct a search service over the top of the registry (which uses Solr and so supports the Solr API – see the Querying Data section in this tutorial), I’d see if I could extract relevant data from the plays (e.g. names of characters, places mentioned etc.) and use those to create queries for the registry and return relevant results.
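To give a feel for what querying a Solr-backed service looks like, here is a minimal sketch in Python (the plugin itself is PHP, so this is not its actual code). The endpoint URL is a placeholder I have made up for illustration, and `build_select_url` is a hypothetical helper; `q`, `rows` and `wt` are standard Solr query parameters.

```python
from urllib.parse import urlencode

# Placeholder endpoint: the real registry URL is not given in this post.
REGISTRY_SELECT_URL = "http://example.org/solr/select"


def build_select_url(query, rows=10, base_url=REGISTRY_SELECT_URL):
    """Return a Solr select URL that asks for `rows` results as JSON."""
    params = {"q": query, "rows": rows, "wt": "json"}
    return base_url + "?" + urlencode(params)


# Example: a URL that searches for a character name.
dogberry_url = build_select_url("Dogberry", rows=5)
```

The response from such a URL would then need parsing and filtering before anything useful could be shown to a reader.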

It seemed to me this approach could provide a set of resources alongside the plays – a starting point for someone reading the play and wanting more information. As I’ve done with previous hacks I decided that I’d use WordPress as a platform to deliver the results, and so would build the hack as a WordPress plugin. I did consider using Moodle, the learning management system, instead of WordPress, as I wanted the final product to be something that could be used easily in an educational context – however in the end, I went with WordPress as having a larger audience.

The first thing I wanted to do was import the text of the plays into a WordPress install, and then start extracting relevant keywords to throw at the registry. This ended up being a lot more time consuming than I expected. I got the basics of downloading a play in XML (I used the simple XML provided by the Will’s World team at http://wwsrv.edina.ac.uk/wworld/plays/index) and creating posts working quite quickly. However, it turned out my decision to create one WP post per line of dialogue was an expensive one – creating posts in WP seems to be quite a slow process, and so it would take minutes to load a play. This in turn led to timeout issues – the web server would time out while waiting for the PHP script to run. It took me some considerable time to amend the import process to import only one act at a time based on the user pressing a ‘Next’ button. The final result isn’t ideal but it does the job – to improve this would take a rewrite of the code, inserting posts directly using SQL rather than the pre-packaged ‘insert post’ function provided by WordPress. I also realised very late on in the hack that my own laptop was a hell of a lot faster than my online host – and I could have avoided these issues altogether if I’d done development locally – but then I guess that would have detracted from the ‘open’ aspect I won a prize for!
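The ‘one act per request’ idea can be sketched roughly as follows. This is Python rather than the plugin’s PHP, and the XML layout (`act`, `scene`, `speech`, `speaker` and `line` elements) is an assumption made for the example, not the actual Will’s World schema. `posts_for_act` is a hypothetical helper that returns the post data for a single act, so each press of ‘Next’ only has to handle a small batch.

```python
import xml.etree.ElementTree as ET

# Made-up sample in an ASSUMED layout; the real play XML may differ.
SAMPLE_PLAY = """
<play>
  <act>
    <scene>
      <speech><speaker>LEONATO</speaker><line>Line one.</line><line>Line two.</line></speech>
    </scene>
  </act>
  <act>
    <scene>
      <speech><speaker>BEATRICE</speaker><line>Line three.</line></speech>
    </scene>
  </act>
</play>
"""


def posts_for_act(xml_text, act_number):
    """Return one post dict per line of dialogue in the given act (1-based)."""
    acts = ET.fromstring(xml_text).findall("act")
    if act_number < 1 or act_number > len(acts):
        return []  # no such act: nothing left to import
    posts = []
    scenes = acts[act_number - 1].findall("scene")
    for scene_number, scene in enumerate(scenes, start=1):
        for speech in scene.findall("speech"):
            speaker = speech.findtext("speaker", default="")
            for line in speech.findall("line"):
                posts.append({
                    "act": act_number,
                    "scene": scene_number,
                    "speaker": speaker,
                    "text": line.text or "",
                })
    return posts
```

Calling `posts_for_act(SAMPLE_PLAY, 1)`, then `2`, and so on until an empty list comes back mirrors the ‘Next’ button flow, with each call short enough to finish before the web server gives up.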

I also spent some time working out how to customise WordPress to display the play text in a useful way. I drew on some experience of developing ‘multi faceted documents’ in WordPress – although I didn’t go quite as far as I would have liked. I also benefited from using WordPress and having access to existing WordPress plugins. For example – putting up a tag cloud immediately gives you information on which characters in the play have the most lines (as I automatically tagged each post with the speaking character name, as well as the Act and Scene it was in).
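The reason the tag cloud works as a ‘who speaks most’ view is simply that it counts posts per tag. A small Python sketch of the same counting, using made-up post data and a hypothetical `lines_per_character` helper (WordPress does this for you, so none of this is in the plugin):

```python
from collections import Counter


def lines_per_character(posts):
    """Count posts (one per line of dialogue) for each speaker tag."""
    return Counter(post["speaker"] for post in posts)


# Made-up posts for illustration only.
sample_posts = [
    {"speaker": "BEATRICE", "act": 1, "scene": 1},
    {"speaker": "BENEDICK", "act": 1, "scene": 1},
    {"speaker": "BEATRICE", "act": 1, "scene": 1},
]

counts = lines_per_character(sample_posts)
busiest = counts.most_common(1)[0][0]  # the speaker with the most lines
```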

As I developed I posted all the code to Github, and kept an online demonstration site up to date with the latest version of the plugin.

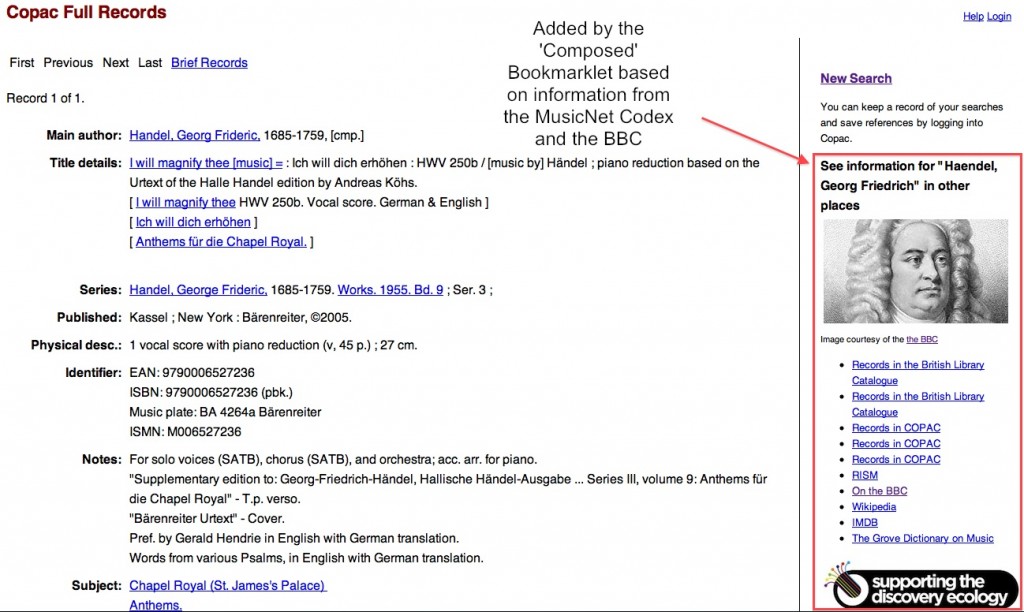

I now had a working plugin that imported the play and displayed it in a useful way. So I was ready to go back to the purpose of the hack – to draw data out of the registry. Earlier when I’d been looking for ideas of what to do I’d also created a data store on ScraperWiki of cast lists from various productions of Shakespeare plays and so an obvious starting point was a page per character in the play that would display this information, plus results from the registry.

I started to put this together. Unfortunately, as you can see in the tag cloud, the character names I got from the XML are actually ‘CharIDs’ and have had white space removed – meaning that while this approach works well for ‘Beatrice’ it fails for ‘Don Pedro’, which is converted to ‘DONPEDRO’. I could solve this either by using a different source for the plays, or by linking with the work Richard Light did for #willhack where he established URIs for each character with their real name and this CharID from the XML. (I also threw in some schema.org markup into each post – this was a bit of an after-thought and I’m not sure if it is useful, or indeed is marked up correctly!)

I found immediately that just throwing a character name at the registry didn’t return great results (perhaps not surprisingly) but combining the character name with the name of the play was not bad generally. Where it tended to provide less interesting results was where the character name was also in the name of the play – for example searching “Romeo AND Romeo and Juliet” doesn’t improve over just searching for “Romeo and Juliet” – and the top hits are all versions of the play from the Open Library data – which is OK, but to be honest not very interesting.
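As a sketch of how the query could be put together, and of one possible way to spot the ‘Romeo’ case, here is some illustrative Python. The phrase quoting and the fallback to the play title alone are my assumptions for the example, not what the plugin currently does, and `registry_query` is a hypothetical helper.

```python
def registry_query(character, play_title):
    """Combine character and play into one query string.

    If the character name already appears in the play title, adding it
    cannot narrow the search, so only the title is used (an assumption
    made for this sketch).
    """
    character = character.strip()
    play_title = play_title.strip()
    if character.lower() in play_title.lower():
        return f'"{play_title}"'
    return f'"{character}" AND "{play_title}"'
```

A check like this at least flags the characters for whom a different search strategy is needed.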

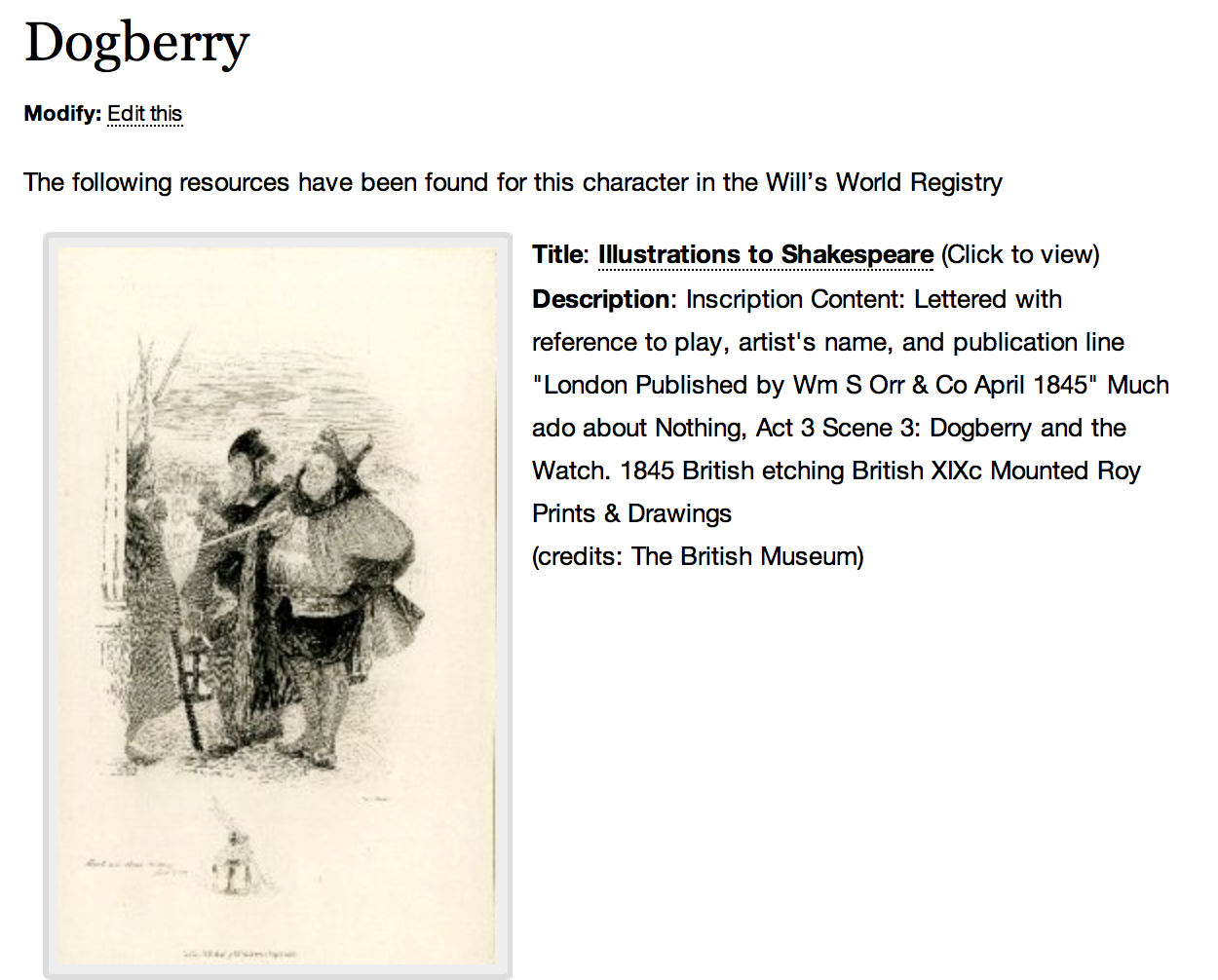

However, at its best, quite a basic approach here creates some interesting results, such as this one for the character of Dogberry from Much Ado About Nothing:

and this interesting snippet from the Culture Grid

The British Museum data proved particularly rich having many images of Shakespearean characters.

I finished off the hack with creating a ‘summary’ page for the play, which tries to get a summary of the play via the DuckDuckGo API (in turn this tends to get the data from Wikipedia – but the MediaWiki API seemed less well documented and harder to use). It also tries to get a relevant podcast and epub version of the play from the Oxford University “Approach Shakespeare” series.
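For anyone curious, fetching a summary this way looks roughly like the sketch below. It is Python rather than the plugin’s PHP, and it assumes the DuckDuckGo Instant Answer endpoint at `api.duckduckgo.com` with `format=json`, reading the `AbstractText`, `AbstractSource` and `AbstractURL` fields of the response. The function names are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

DDG_API = "https://api.duckduckgo.com/"


def summary_url(play_title):
    """Build an Instant Answer request URL for the play title."""
    params = {"q": play_title, "format": "json", "no_html": 1}
    return DDG_API + "?" + urlencode(params)


def extract_summary(payload):
    """Pick the abstract out of a decoded response, or None if it is empty."""
    text = payload.get("AbstractText") or ""
    if not text:
        return None
    return {
        "text": text,
        "source": payload.get("AbstractSource", ""),
        "url": payload.get("AbstractURL", ""),
    }


def fetch_summary(play_title, timeout=10):
    """Fetch and extract a summary; needs network access."""
    with urlopen(summary_url(play_title), timeout=timeout) as response:
        return extract_summary(json.load(response))
```

Keeping the parsing in `extract_summary` separate from the network call makes it easy to cope with plays for which no abstract comes back.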

Once the posts and pages have been created they are static – they are created on the fly, but don’t update at all. This means that the blog owner can then edit them as they see fit – maybe adding in descriptions of the characters, or annotating parts of the play etc. All the usual WordPress functionality is available so you could add more plugins etc. (although the play layout depends on you using the Theme that is delivered as part of the plugin).

I think this could be a great starting point for creating a resource aimed at schools – a teacher gets a website with the play text and pointers to more resources. I hope that illustrations of characters, and information about people who have played them (especially where that’s a recognisable name like Sean Bean playing Puck) bring the play to life a bit and give some context. It also occurred to me that I could create some ‘plan a trip’ pages which would present resources from a particular collection – like the British Museum – in a single page, pointing at objects you could look at when you visited the museum.

You can try the plugin right now – just set up a clean WordPress install, download the ShakespearePress plugin from Github, drop it into the ‘plugins’ directory, install it via the WP admin interface, then go to the settings page (under the settings menu) – and it will walk you through the process. All feedback very welcome. You can also browse a site created by the plugin at http://demonstrators.ostephens.com/willhack.

I was going to comment on my experience of using the Registry here, but I’ve already gone on too much – that will have to be a separate post!