[UPDATE 2014-11-20: The British Museum data model has changed quite a bit since I wrote this. While there is still useful stuff in this post, the detail of any particular query or comment may well now be outdated. I’ve added some updates in square brackets in some cases]

In September 2011 the British Museum started publishing descriptions of items in its collections as RDF (the data structure that underlies Linked Data). The data is available from http://collection.britishmuseum.org/ where the Museum have made a ‘SPARQL Endpoint’ available. SPARQL is a query language for extracting data from RDF stores – it can be seen as a parallel to SQL, which is a query language for extracting data from traditional relational databases.

Although I knew what SPARQL was, and what it looked like, I really hadn’t got to grips with it, and since I’d just recently purchased “Learning SPARQL” it seemed like a good opportunity to get familiar with the British Museum data and SPARQL syntax. So I had a play (more below). Skip forward a few months, and I noticed some tweets from a JISC meeting about the Pelagios project (which is interested in the creation of linked (geo)data to describe ‘ancient places’), and in particular from Mia Ridge and Alex Dutton which indicated they were experimenting with the British Museum data. My previous experience seemed to gel with the experience they were having, and prompted me to finally get on with a blog post documenting my experience so hopefully others can benefit.

Perhaps one reason I’ve been a bit reluctant to blog this is that I struggled with the data, and I don’t want this post to come across as overly critical of the British Museum. The fact they have their data out there at all is amazing – and I hope other museums (and archives and libraries) follow the lead of the British Museum in releasing data onto the web. So I hope that all comments/criticisms below come across as offering suggestions for improving the Museum data on the web (and offering pointers to others doing similar projects), and of course the opportunity for some dialogue about the issues. There is also no doubt that some of the issues I encountered were down to my own ignorance/stupidity – so feel free to point out obvious errors.

When you arrive at the British Museum SPARQL endpoint the nice thing is there is a pre-populated query that you can run immediately. It just retrieves 10 results, of any type, from the data – but it means you aren’t staring at a blank form, and those ten results give a starting point for exploring the data set. Most URIs in the resulting data are clickable, and give you a nice way of finding what data is in the store, and to start to get a feel for how it is structured.

For example, running the default search now brings back the triple:

Which is intriguing enough to make you want to know more (I am married, and have to admit I don’t remember any special equipment). Clicking on the URI http://collection.britishmuseum.org/id/object/EAF119772 in a browser takes you to an HTML representation of the resource – a list of all the triples that make statements about the item in the British Museum identified by that URI.

While I think it would be an exaggeration to say this is ‘easily readable’, sometimes, as with the triple above, there is enough information to guess the basics of what is being said – for example:

From this it is perhaps easy enough to see that there is some item (identified by the URI http://collection.britishmuseum.org/id/object/EAF119772) which has a note related to it stating that it was acquired (presumably by the museum) in 1994.

So far, so good. I’d got an idea of the kind of information that might be in the database. So the next question I had was “what kind of queries could I throw at the data that might produce some interesting/useful results?” Since I’d recently been playing around with data about composers I thought it might be interesting to see if the British Museum had any objects that were related to a well-known composer – say Mozart.

This is where I started to hit problems…. In my initial explorations, while some information was obvious, I’d also realised that the data was modelled using something called CIDOC CRM, which is intended to model ‘cultural heritage’ data. With some help from Twitter (including staff at the British Museum) I started to read up on CIDOC CRM – and struggled! Even now I’m not sure I’d say I feel completely on top of it, but I now have a bit of a better understanding. Much of the CIDOC model is based around ‘events’ – things that happened at a certain time/in a certain place. This means that often what might seem like a simple piece of information – such as where an item in the museum originates from – becomes complex.

To give a simple example, the ‘discovery’ of an item is a kind of event. So to find all the items in the British Museum ‘discovered’ in Greenwich you have to first find all the ‘discovery’ events that ‘took place at’ Greenwich, then link these discovery events back to the items they are related to:

An item -> was discovered by a discovery event -> which took place at Greenwich

This adds extra complexity to what might seem initially (naively?) a simple query. This example was inspired by discussion at the Pelagios event mentioned earlier – the full query is:

SELECT ?greenwichitem WHERE

{

?s <http://collection.britishmuseum.org/id/crm/P7F.took_place_at> <http://collection.britishmuseum.org/id/thesauri/x34215> .

?subitem <http://collection.britishmuseum.org/id/crm/bm-extensions/PX.was_discovered_by> ?s .

?greenwichitem <http://collection.britishmuseum.org/id/crm/P46F.is_composed_of> ?subitem

}

and the results can be seen at http://bit.ly/vojTWq.
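To make the shape of that query concrete, here is a minimal sketch in Python that performs the same three-step join over a handful of made-up triples held in memory. The identifiers (`event1`, `part1`, `item1`, and the shortened property names) are invented for illustration and do not come from the Museum data; only the pattern of the join mirrors the query above.

```python
# Hypothetical triples, using shortened names in place of full URIs.
TRIPLES = [
    ("event1", "took_place_at", "greenwich"),
    ("event2", "took_place_at", "somewhere_else"),
    ("part1", "was_discovered_by", "event1"),
    ("part2", "was_discovered_by", "event2"),
    ("item1", "is_composed_of", "part1"),
    ("item2", "is_composed_of", "part2"),
]


def subjects(triples, predicate, obj):
    """Return every subject that has the given predicate and object."""
    return [s for (s, p, o) in triples if p == predicate and o == obj]


def items_discovered_at(triples, place):
    """Follow place -> discovery event -> sub-item -> parent item."""
    found = []
    for event in subjects(triples, "took_place_at", place):
        for part in subjects(triples, "was_discovered_by", event):
            found.extend(subjects(triples, "is_composed_of", part))
    return found


print(items_discovered_at(TRIPLES, "greenwich"))
```

Each nested loop corresponds to one triple pattern in the SPARQL query, which is why a question that sounds like a single lookup needs three patterns.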

[UPDATE 2014-11-20: This query no longer works. The query is now simpler:

PREFIX ecrm: <http://erlangen-crm.org/current/>

SELECT ?greenwichitem WHERE

{

?find ecrm:P7_took_place_at <http://collection.britishmuseum.org/id/place/x34215> .

?greenwichitem ecrm:P12i_was_present_at ?find

}

END UPDATE]
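A query like the updated one can also be sent from a script rather than pasted into the web form. The following is a rough sketch using only the Python standard library. The endpoint address in `ENDPOINT` is my assumption and may not match the Museum's current setup, and I am assuming the endpoint accepts a plain GET request with a `query` parameter and can return JSON results, as the SPARQL protocol describes.

```python
import json
import urllib.parse
import urllib.request

# Assumed endpoint address; check the Museum's site for the current one.
ENDPOINT = "http://collection.britishmuseum.org/sparql"

GREENWICH_QUERY = """
PREFIX ecrm: <http://erlangen-crm.org/current/>
SELECT ?greenwichitem WHERE {
  ?find ecrm:P7_took_place_at <http://collection.britishmuseum.org/id/place/x34215> .
  ?greenwichitem ecrm:P12i_was_present_at ?find
}
"""


def build_request(endpoint, query):
    """Build a GET request that passes the query in the 'query' parameter."""
    url = endpoint + "?" + urllib.parse.urlencode({"query": query})
    headers = {"Accept": "application/sparql-results+json"}
    return urllib.request.Request(url, headers=headers)


def run_query(endpoint, query):
    """Send the query and return the result bindings (needs network access)."""
    with urllib.request.urlopen(build_request(endpoint, query)) as response:
        data = json.load(response)
    return data["results"]["bindings"]
```

Calling `run_query(ENDPOINT, GREENWICH_QUERY)` would return one dictionary per result row, provided the assumptions above hold.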

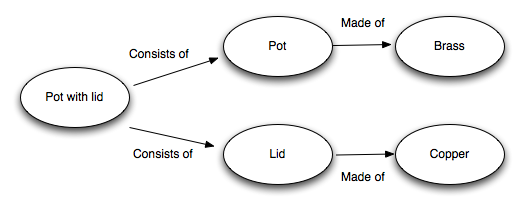

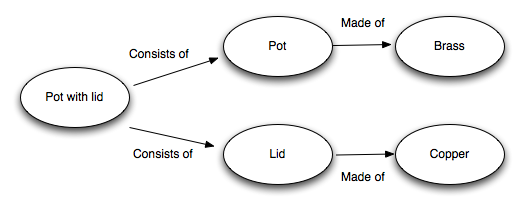

To make things even more complex the British Museum data seems to describe all items actually as made up of (what I’m calling) ‘sub-items’. In some cases this makes some sense. If a single item is actually made up of several pieces, each with its own properties and provenance, it clearly makes sense to describe each part separately.

However, the British Museum data describes even single items as made up of ‘pieces’ – just that the single item consists of a single piece – and it is then that piece that has many of the properties of the item associated with it. To illustrate. A multi-piece item is like:

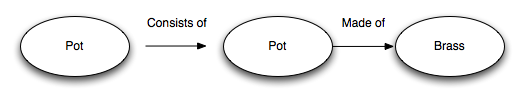

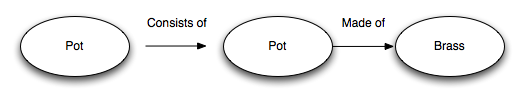

Which makes sense to me. But a single piece item is like:

I found (and continue to find) this confusing. This isn’t helped in my view by the fact that some properties are attached to the ‘parent’ object, and some to the ‘child’ object, and I can’t really work out the logic associated with this. For example it is the ‘parent’ object that belongs to a department in the British Museum, while it is the ‘child’ object that is made of a specific material. Both the parent and child in this situation are classified as physical objects, and this feels wrong to me.

Thankfully a link from the Pelagios meeting alerted me to some more detailed documentation around the British Museum data (http://www.researchspace.org/Stage-2-Outputs), and this suggests that the British Museum are going to move away from this model:

Firstly, after much debate we have concluded that preserving the existing modelling relationship as described earlier whereby each object always consists of at least one part is largely nonsense and should not be preserved.

While arguments were put forward earlier for retaining this minimum one part per object scheme, it has now been decided that only objects which are genuinely composed of multiple parts will be shown as having parts.

The same document notes that the current modelling “may be slightly counter-intuitive” – I can back up this view!

So – back to finding stuff related to Mozart… apart from struggling with the data model, the other issue I encountered was that it was difficult to approach the dataset through anything except a URI for an entity. That is to say, if you knew the URI for ‘Wolfgang Amadeus Mozart’ in the museum data set, the query would be easy, but if you only know a name, then it is much more difficult. How could I find the URI for Mozart, to then find all related objects?

Just using SPARQL, there are two approaches that might work. If you know the exact (and I mean exact) form of the name in the data, you can query for a ‘literal’ – i.e. do a SPARQL query for a textual string such as “Mozart, Wolfgang Amadeus”. If this is the exact form used in the data, the query will be successful, but if you get this slightly wrong then you’ll fail to get any result. A working example for the British Museum data is:

SELECT * WHERE

{

?s ?p "Mozart, Wolfgang Amadeus"

}

The second approach you can use is to do a more general query and ‘filter’ the result using a regular expression. Regular expressions are ways of looking for patterns in text strings, and are incredibly powerful (supporting things like wildcards, ignoring case etc. etc.). So you can be a lot less precise than searching for an exact string, and for example, you might try to retrieve all the statements about ‘people’ and filter for those containing the (case insensitive) word ‘mozart’. While this would get you Leopold Mozart as well as Wolfgang Amadeus if both are present in the data, there are probably a small enough number of mozarts that you would be able to pick out WA Mozart by eye, and get the relevant URI which identifies him.

A possible query of this type is:

SELECT * WHERE

{

?s <http://xmlns.com/foaf/0.1/Name> ?o

FILTER regex(?o, "mozart", "i")

}
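The difference between the two approaches is easy to see outside SPARQL. Below is a small Python sketch over a made-up list of name literals (the names in `NAMES` are my own examples, not taken from the Museum data). Python's `re` module is not identical to the regular expression flavour SPARQL uses, so treat this as an analogy rather than an exact equivalent.

```python
import re

# Hypothetical name literals; the real data may use other forms.
NAMES = [
    "Mozart, Wolfgang Amadeus",
    "Mozart, Leopold",
    "Haydn, Joseph",
]


def exact_matches(names, wanted):
    """Mimic matching a literal: the string must be identical."""
    return [name for name in names if name == wanted]


def regex_matches(names, pattern):
    """Mimic FILTER regex(?o, pattern, "i"): case-insensitive search."""
    compiled = re.compile(pattern, re.IGNORECASE)
    return [name for name in names if compiled.search(name)]


print(exact_matches(NAMES, "Wolfgang Amadeus Mozart"))  # word order differs
print(regex_matches(NAMES, "mozart"))
```

The exact match finds nothing because the word order differs from the stored form, while the regular expression finds both Mozarts and leaves you to pick the right one by eye.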

Unfortunately this latter type of ‘filter’ query is pretty inefficient, and the British Museum SPARQL endpoint has some restrictions which mean that if you try to retrieve more than a relatively small amount of data at one time you just get an error. Since this is essentially how ‘filter’ queries work (retrieve a largish amount of data first, then filter out the stuff you don’t want), I couldn’t get this working. The issue of only being able to retrieve small sets of data was a bit of a frustration overall with the SPARQL endpoint, not helped by the fact that it seemed to be relatively arbitrary in terms of what ‘size’ of result set caused an error – I assume it is something about the overall amount of data retrieved, as it seemed unrelated to the actual number of results retrieved – for example using:

SELECT * WHERE

{

?s ?p ?o

}

You can retrieve only 123 results before you get an error, while using

SELECT ?s WHERE

{

?s ?p ?o

}

You can retrieve over 300 results without getting an error.
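One common way of living with a cap like this is to ask for results a page at a time using the standard SPARQL `LIMIT` and `OFFSET` keywords. The sketch below only builds the query strings; the helper name `paged_queries` and the page size of 100 are my own choices, and I have not confirmed that paging actually avoids the error on the British Museum endpoint.

```python
def paged_queries(pattern, variables="*", page_size=100, pages=3):
    """Yield SELECT queries that fetch one page of results at a time."""
    for page in range(pages):
        yield (
            f"SELECT {variables} WHERE {{ {pattern} }} "
            f"LIMIT {page_size} OFFSET {page * page_size}"
        )


for query in paged_queries("?s ?p ?o", page_size=100, pages=3):
    print(query)
```

Note that without an `ORDER BY` clause SPARQL does not guarantee a stable order of results, so pages fetched this way could overlap or miss rows.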

This limitation is an issue in itself (and the British Museum are by no means alone in having performance issues with an RDF triple store), but it is doubly frustrating that the limit is unclear.

The difficulty of exploring the British Museum data from a simple textual string became a real frustration as I explored the data – it made me realise that while the Linked Data/RDF concept of using URIs and not literals is something I understand and agree with, as people all we know is textual strings that describe things, so to make the data more immediately usable, supporting textual searches (e.g. via a solr index over the literals in the data) might be a good idea.
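As a rough illustration of what I mean by a text index over the literals, here is a toy version in Python: a dictionary from lower-cased words to the URIs whose labels contain them. The URIs and labels in `LABELS` are placeholders I have made up, and a real index such as Solr does far more (stemming, ranking, phrase queries) than this sketch.

```python
from collections import defaultdict

# Hypothetical (URI, literal) pairs standing in for labels in the data.
LABELS = [
    ("http://example.org/person/1", "Mozart, Wolfgang Amadeus"),
    ("http://example.org/person/2", "Mozart, Leopold"),
    ("http://example.org/place/1", "Greenwich"),
]


def build_index(labels):
    """Map each lower-cased word to the set of URIs whose label contains it."""
    index = defaultdict(set)
    for uri, text in labels:
        for word in text.lower().replace(",", " ").split():
            index[word].add(uri)
    return index


def lookup(index, term):
    """Return the URIs for a single word, ignoring case."""
    return sorted(index.get(term.lower(), set()))


index = build_index(LABELS)
print(lookup(index, "Mozart"))
```

With something like this in front of the triple store, a name typed by a person becomes a short list of candidate URIs, which can then be used in ordinary SPARQL queries.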

I got so frustrated that I went looking for ways of compensating. The British Museum data makes extensive use of ‘thesauri’ – lists of terms for describing people, places, times, object types, etc. In theory these thesauri would give the text string entry points into the data, and I found that one of the relevant thesauri (object types) was available on the Collections Link website (http://www.collectionslink.org.uk/assets/thesaurus/Objintro.htm). Each term in this data corresponds to a URI in the British Museum data, and so I wrote a ScraperWiki script which would search for each term in the British Museum data and identify the relevant URI and record both the term and the URI. At the same time a conversation with @portableant on twitter alerted me to the fact that the ‘Portable Antiquities’ site uses a (possibly modified) version of the same thesaurus for classifying objects, so I added in a lookup of the term on this site to start to form connections between the Portable Antiquities data and the British Museum data. This script is available at https://scraperwiki.com/scrapers/british_museum_object_thesaurus/, but comes with some caveats about the question of how up to date the thesaurus on the Collections Link website is, and the possible imperfections of the matching between the thesaurus and the British Museum data.

Unfortunately it seems that this ‘object type’ thesaurus is the only one made publicly available (or at least the only one I could find), while clearly the people and place thesauri would be really interesting, and provide valuable access points into the data. But really ideally these would be built from the British Museum data directly, rather than being separate lists.

So, finally back to Mozart. I discovered another way into the data – which was via the really excellent British Museum website, which offers the ability to search the collections via a nice web interface. This is a good search interface, and gives access to the collections – to be honest already solving problems such as the one I set myself here (of finding all objects related to Mozart) – but never mind that now! If you search this interface and find an object, when you view the record for the object, you’ll probably be at a URL something like:

http://www.britishmuseum.org/research/search_the_collection_database/search_object_details.aspx?objectid=3378094&partid=1&searchText=mozart&numpages=10&orig=%2fresearch%2fsearch_the_collection_database.aspx&currentPage=1

If you extract the “objectid” (in this case ‘3378094’) from this, you can use this to look up the RDF representation of the same object using a query like:

SELECT * WHERE

{

?s <http://www.w3.org/2002/07/owl#sameAs> <http://collection.britishmuseum.org/id/codex/3378094>

}
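If you want to do this step in a script rather than by hand, the following Python sketch pulls the `objectid` parameter out of a search URL and builds the lookup query above. The function names are my own, and the example URL in the usage lines is a shortened version of the one shown earlier.

```python
from urllib.parse import parse_qs, urlparse

CODEX_BASE = "http://collection.britishmuseum.org/id/codex/"


def object_id_from_url(url):
    """Return the 'objectid' query parameter from a collection search URL."""
    params = parse_qs(urlparse(url).query)
    return params["objectid"][0]


def same_as_query(object_id):
    """Build the owl:sameAs lookup query for the given object id."""
    return (
        "SELECT * WHERE { ?s <http://www.w3.org/2002/07/owl#sameAs> "
        f"<{CODEX_BASE}{object_id}> }}"
    )


url = (
    "http://www.britishmuseum.org/research/search_the_collection_database/"
    "search_object_details.aspx?objectid=3378094&partid=1&searchText=mozart"
)
print(same_as_query(object_id_from_url(url)))
```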

This gives you the URI for the object, which you can then use to find other relevant URIs. So in this case I was able to extract the URI for Wolfgang Amadeus Mozart (http://collection.britishmuseum.org/id/person-institution/39629) and so create a query like:

SELECT ?item WHERE

{

?s ?p <http://collection.britishmuseum.org/id/person-institution/39629> .

?item <http://collection.britishmuseum.org/id/crm/P46F.is_composed_of> ?s

}

To find the 9 (as of today) items that are in some way related to Mozart (mostly pictures/engravings of Mozart).

The discussion at the Pelagios meeting identified several ‘anti-patterns’ related to the usability of Linked Data – and some of these jumped out at me as being issues when using the British Museum data:

Anti-patterns

- homepages that don’t say where data can be found

- not providing info on licences

- not providing info on RDF syntaxes

- not providing egs of query construction

- not providing easy way to get at term lists

- no html browsing

- complex data models

One thing I felt strongly when I was looking at the British Museum data is that it would have been great to be able to ‘go’ somewhere that others looking at/using the data would also be to discuss the issues. The British Museum provide an email to send feedback (which I’ve used), but what I wanted to do was say things like “am I being stupid?” and “anyone else find this?” etc. As a result of discussion at the Pelagios meeting, and on twitter, Mia Ridge has set up a wiki page for just such a discussion.